Create a chatbot that can ingest files

This tutorial shows you how to build a chatbot that can read and answer questions about files you upload, such as meeting notes or job applications.

For example, you could upload a contract and ask, “What are the termination clauses in this agreement?” Or upload a resume and ask, “Does this candidate have experience with marketing analytics?”

The main focus of this tutorial is to show you how to provide files as input to a Langflow flow, so your chatbot can use the content of those files in its responses.

Prerequisites

- Install and start Langflow

- Create a Langflow API key

- Create an OpenAI API key

This tutorial uses an OpenAI LLM. If you want to use a different provider, you need a valid credential for that provider.

Create a flow that accepts file input

To ingest files, your flow must have a Read File component attached to a component that receives input, such as a Prompt Template or Agent component.

The following steps modify the Basic Prompting template to accept file input:

- In Langflow, click New Flow, and then select the Basic Prompting template.

- In the Language Model component, enter your OpenAI API key.

If you want to use a different provider or model, edit the Model Provider, Model Name, and API Key fields accordingly.

- To verify that your API key is valid, click Playground, and then ask the LLM a question. The LLM should respond according to the specifications in the Prompt Template component's Template field.

- Exit the Playground, and then modify the Prompt Template component to accept file input in addition to chat input. To do this, edit the Template field, and then replace the default prompt with the following text:

```
ChatInput:
{chat-input}
File:
{file}
```

Tip: You can use any string to name your template variables. These strings become the names of the fields (input ports) on the Prompt Template component.

For this tutorial, the variables are named after the components that connect to them: chat-input for the Chat Input component and file for the Read File component.

- Add a Read File component to the flow, and then connect the Raw Content output port to the Prompt Template component's file input port. To connect ports, click and drag from one port to the other.

You can add files directly to the Read File component to pre-load input before running the flow, or you can load files at runtime. The next section of this tutorial covers runtime file uploads.

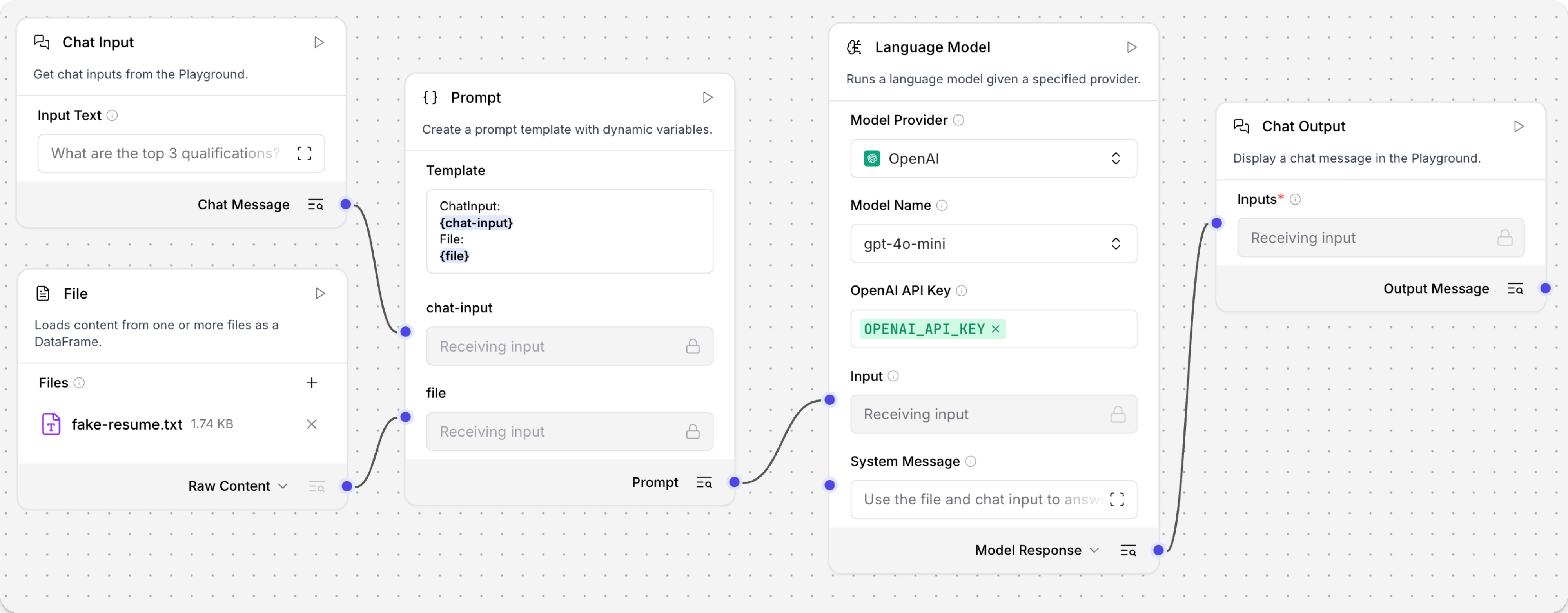

At this point your flow has five components. The Chat Input and Read File components are connected to the Prompt Template component's input ports. Then, the Prompt Template component's output port is connected to the Language Model component's input port. Finally, the Language Model component's output port is connected to the Chat Output component, which returns the final response to the user.
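To illustrate how the {chat-input} and {file} variables in this tutorial's template map to input ports, the following sketch extracts the variable names from the template and fills them with sample values. This is a simplified, hypothetical model in plain Python, not Langflow's own Prompt Template implementation. The helper names template_variables and fill_template are illustrative.

```python
import re

# The template from this tutorial's Prompt Template component
TEMPLATE = "ChatInput:\n{chat-input}\nFile:\n{file}"

# Matches single-brace variables such as {chat-input} or {file}
VARIABLE_PATTERN = re.compile(r"\{([^{}]+)\}")


def template_variables(template):
    """Return the variable names in order of first appearance, without duplicates."""
    names = []
    for name in VARIABLE_PATTERN.findall(template):
        if name not in names:
            names.append(name)
    return names


def fill_template(template, values):
    """Replace each {name} with values[name]; raises KeyError if a value is missing."""
    return VARIABLE_PATTERN.sub(lambda match: values[match.group(1)], template)


# Each variable corresponds to one input port on the Prompt Template component
ports = template_variables(TEMPLATE)
print(ports)  # ['chat-input', 'file']

prompt = fill_template(TEMPLATE, {
    "chat-input": "Does this candidate have experience with marketing analytics?",
    "file": "(text that the Read File component loaded)",
})
print(prompt)
```

In the real flow, Langflow fills these variables from the connected Chat Input and Read File components rather than from a dictionary.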

Send requests to your flow from a Python application

This section of the tutorial demonstrates how you can send file input to a flow from an application.

To do this, your application must first upload the file to your Langflow server. It then sends a POST /run request that references the uploaded file and includes a text prompt.

The result includes the outcome of the flow run and the LLM's response.

This example uses a local Langflow instance, and it asks the LLM to evaluate a sample resume. If you don't have a resume on hand, you can download fake-resume.txt.

For help with constructing file upload requests in Python, JavaScript, and curl, see the Langflow File Upload Utility.

- To construct the request, gather the following information:

  - LANGFLOW_SERVER_ADDRESS: Your Langflow server's address. The default value is 127.0.0.1:7860. You can get this value from the code snippets on your flow's API access pane.
  - FLOW_ID: Your flow's UUID or custom endpoint name. You can get this value from the code snippets on your flow's API access pane.
  - FILE_COMPONENT_ID: The ID of the Read File component in your flow, such as File-KZP68. To find the component ID, open your flow in Langflow, click the Read File component, and then click Controls. The component ID is at the top of the Controls pane.
  - CHAT_INPUT: The message you want to send to the Chat Input of your flow, such as “Evaluate this resume for a job opening in my Marketing department.”
  - FILE_NAME and FILE_PATH: The name and path of the local file that you want to send to your flow.
  - LANGFLOW_API_KEY: A valid Langflow API key.

- Copy the following script into a Python file, and then replace the placeholders with the information you gathered in the previous step:

```python
# Python example using requests
import requests

# 1. Set the upload URL
url = "http://LANGFLOW_SERVER_ADDRESS/api/v2/files/"

# 2. Prepare the request headers
headers = {
    'Accept': 'application/json',
    'x-api-key': 'LANGFLOW_API_KEY'
}

# 3. Upload the file to Langflow
# Each file entry is (file name sent to the server, open file object, content type)
with open('FILE_PATH', 'rb') as f:
    files = {'file': ('FILE_NAME', f, 'application/octet-stream')}
    response = requests.post(url, headers=headers, files=files)
response.raise_for_status()
print(response.text)

# 4. Get the uploaded file path from the response
uploaded_data = response.json()
uploaded_path = uploaded_data.get('path')

# 5. Call the Langflow run endpoint with the uploaded file path
run_url = "http://LANGFLOW_SERVER_ADDRESS/api/v1/run/FLOW_ID"
run_payload = {
    "input_value": "CHAT_INPUT",
    "output_type": "chat",
    "input_type": "chat",
    # Send the uploaded file's path directly to the Read File component
    "tweaks": {
        "FILE_COMPONENT_ID": {
            "path": uploaded_path
        }
    }
}
run_headers = {
    'Content-Type': 'application/json',
    'Accept': 'application/json',
    'x-api-key': 'LANGFLOW_API_KEY'
}
run_response = requests.post(run_url, headers=run_headers, json=run_payload)
run_response.raise_for_status()
langflow_data = run_response.json()

# Output only the message
message = None
try:
    message = langflow_data['outputs'][0]['outputs'][0]['results']['message']['data']['text']
except (KeyError, IndexError, TypeError):
    pass
print(message)
```

This script contains two requests.

  - The first request uploads a file, such as fake-resume.txt, to your Langflow server at the /v2/files endpoint. This request returns a file path that can be referenced in subsequent Langflow requests, such as 02791d46-812f-4988-ab1c-7c430214f8d5/fake-resume.txt.
  - The second request sends a chat message to the Langflow flow at the /v1/run/ endpoint. The tweaks parameter passes the uploaded file's path, stored in the uploaded_path variable, directly to the Read File component.

- Save and run the script to send the requests and test the flow.

The initial output contains the JSON response object from the file upload endpoint, including the internal path where Langflow stores the file. Then, the Read File component loads the file, and the LLM evaluates its content. In this case, the LLM assesses the suitability of the resume for a job position.
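You can read the values you gathered from environment variables instead of pasting them directly into the script. This keeps your Langflow API key out of source code. The following sketch assumes you export variables with the same names as the placeholders. The load_config helper is hypothetical and is not part of Langflow. It falls back to the default server address 127.0.0.1:7860 when LANGFLOW_SERVER_ADDRESS isn't set.

```python
import os

# Placeholder names from the tutorial that have no sensible default
REQUIRED = ("FLOW_ID", "FILE_COMPONENT_ID", "LANGFLOW_API_KEY")


def load_config(env=os.environ):
    """Build the tutorial's URLs and headers from environment variables (hypothetical helper)."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    # Fall back to the default local Langflow address
    server = env.get("LANGFLOW_SERVER_ADDRESS", "127.0.0.1:7860")
    return {
        "upload_url": f"http://{server}/api/v2/files/",
        "run_url": f"http://{server}/api/v1/run/{env['FLOW_ID']}",
        "file_component_id": env["FILE_COMPONENT_ID"],
        "headers": {"Accept": "application/json", "x-api-key": env["LANGFLOW_API_KEY"]},
    }
```

In the script, you could call config = load_config() once. You would then use config["upload_url"], config["run_url"], config["file_component_id"], and config["headers"] in place of the hard-coded placeholders.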

Result

The following is an example response from this tutorial's flow. Due to the nature of LLMs and variations in your inputs, your response might be different.

```
{"id":"793ba3d8-5e7a-4499-8b89-d9a7b6325fee","name":"fake-resume (1)","path":"02791d46-812f-4988-ab1c-7c430214f8d5/fake-resume.txt","size":1779,"provider":null}
The resume for Emily J. Wilson presents a strong candidate for a position in your Marketing department. Here are some key points to consider:

### Strengths:
1. **Experience**: With over 8 years in marketing, Emily has held progressively responsible positions, culminating in her current role as Marketing Director. This indicates a solid foundation in the field.

2. **Quantifiable Achievements**: The resume highlights specific accomplishments, such as a 25% increase in brand recognition and a 30% sales increase after launching new product lines. These metrics demonstrate her ability to drive results.

3. **Diverse Skill Set**: Emily's skills encompass various aspects of marketing, including strategy development, team management, social media marketing, event planning, and data analysis. This versatility can be beneficial in a dynamic marketing environment.

4. **Educational Background**: Her MBA and a Bachelor's degree in Marketing provide a strong academic foundation, which is often valued in marketing roles.

5. **Certifications**: The Certified Marketing Professional (CMP) and Google Analytics Certification indicate a commitment to professional development and staying current with industry standards.

### Areas for Improvement:
1. **Specificity in Skills**: While the skills listed are relevant, providing examples of how she has applied these skills in her previous roles could strengthen her resume further.

2. **References**: While stating that references are available upon request is standard, including a couple of testimonials or notable endorsements could enhance credibility.

3. **Formatting**: Ensure that the resume is visually appealing and easy to read. Clear headings and bullet points help in quickly identifying key information.

### Conclusion:
Overall, Emily J. Wilson's resume reflects a well-rounded marketing professional with a proven track record of success. If her experience aligns with the specific needs of your Marketing department, she could be a valuable addition to your team. Consider inviting her for an interview to further assess her fit for the role.
```
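The script reads the LLM's reply with a single chained lookup into the /run response. If you reuse that logic in a larger application, you can keep the lookup in one place with a small helper, such as the following hypothetical extract_message function. It returns None when the response doesn't have the expected shape. The nested keys mirror the ones the tutorial script uses. Other flows or Langflow versions might return a different structure. The sample dictionary is a trimmed, made-up response for illustration only.

```python
def extract_message(langflow_data):
    """Return the chat reply text from a /run response, or None if it isn't there."""
    try:
        return langflow_data["outputs"][0]["outputs"][0]["results"]["message"]["data"]["text"]
    except (KeyError, IndexError, TypeError):
        # Missing key, empty list, or a non-dict/non-list value along the path
        return None


# A trimmed-down, made-up response with the same nesting the tutorial script expects
sample = {
    "outputs": [
        {"outputs": [{"results": {"message": {"data": {"text": "The resume for Emily J. Wilson ..."}}}}]}
    ]
}
print(extract_message(sample))
print(extract_message({}))  # None
```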

Next steps

To continue building on this tutorial, try these next steps.

Process multiple files loaded at runtime

To process multiple files in a single flow run, add a separate Read File component for each file you want to ingest. Then, modify your script to upload each file, retrieve each returned file path, and then pass a unique file path to each Read File component ID.

For example, you can modify tweaks to accept multiple Read File components.

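The following code is a sketch of this approach. Replace the placeholders with your own values, including the IDs of your Read File components and the paths returned when you uploaded each file:

```python
import requests

LANGFLOW_SERVER_ADDRESS = "http://LANGFLOW_SERVER_ADDRESS"
FLOW_ID = "FLOW_ID"
LANGFLOW_API_KEY = "LANGFLOW_API_KEY"

# Map each Read File component ID to the path returned when its file was uploaded
file_paths = {
    "FILE_COMPONENT_1": "UPLOADED_PATH_1",
    "FILE_COMPONENT_2": "UPLOADED_PATH_2",
}

def chat_with_flow(input_message, file_paths):
    """Send a chat message and pass each uploaded file to its Read File component."""
    run_url = f"{LANGFLOW_SERVER_ADDRESS}/api/v1/run/{FLOW_ID}"
    # Prepare tweaks with one file path per Read File component
    tweaks = {component_id: {"path": path} for component_id, path in file_paths.items()}
    payload = {"input_value": input_message, "output_type": "chat",
               "input_type": "chat", "tweaks": tweaks}
    headers = {"Accept": "application/json", "x-api-key": LANGFLOW_API_KEY}
    response = requests.post(run_url, headers=headers, json=payload)
    response.raise_for_status()
    return response.json()["outputs"][0]["outputs"][0]["results"]["message"]["data"]["text"]

print(chat_with_flow("Compare the contents of these two files.", file_paths))
```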
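The upload half of this approach can be sketched the same way. The following example loops over a mapping of Read File component IDs to local files. It uploads each file and builds the tweaks dictionary from the returned paths. The build_file_tweaks helper, the fake_upload stand-in, and the component IDs and file names are hypothetical. In practice, upload would be a function that wraps the upload steps of the tutorial script (the POST to /api/v2/files/) and returns the "path" value from the JSON response.

```python
from typing import Callable, Dict


def build_file_tweaks(
    component_files: Dict[str, str],
    upload: Callable[[str], str],
) -> Dict[str, Dict[str, str]]:
    """Upload each local file and map its Read File component ID to the returned path."""
    tweaks = {}
    for component_id, local_path in component_files.items():
        # Each component gets its own uploaded file path
        tweaks[component_id] = {"path": upload(local_path)}
    return tweaks


# Stand-in uploader that mimics the ID/filename shape of the path in the sample response
def fake_upload(local_path):
    return f"USER_ID/{local_path}"


tweaks = build_file_tweaks(
    {"File-AAAAA": "resume-1.txt", "File-BBBBB": "resume-2.txt"},
    upload=fake_upload,
)
print(tweaks)
```

Passing the uploader in as a parameter keeps the mapping logic independent of the HTTP client, so you can test it without a running Langflow server.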

You can also use a Directory component to load all files in a directory or pass an archive file to the Read File component.
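If you choose the archive route, you can bundle several local files into a single .zip file. You then upload that file with the same steps as the tutorial script, setting FILE_NAME and FILE_PATH to the archive. The following sketch uses Python's standard zipfile module. The bundle_files helper and the file names are illustrative. The sketch assumes that your Langflow version's Read File component accepts .zip archives.

```python
import tempfile
import zipfile
from pathlib import Path


def bundle_files(paths, archive_path):
    """Write the given files into one .zip archive, storing each under its base name."""
    with zipfile.ZipFile(archive_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in paths:
            archive.write(path, arcname=Path(path).name)
    return archive_path


# Demo: bundle two small text files in a temporary directory
with tempfile.TemporaryDirectory() as tmp:
    tmp_dir = Path(tmp)
    sources = []
    for name, text in [("resume-1.txt", "First resume"), ("resume-2.txt", "Second resume")]:
        path = tmp_dir / name
        path.write_text(text)
        sources.append(path)
    archive_file = bundle_files(sources, tmp_dir / "resumes.zip")
    with zipfile.ZipFile(archive_file) as zf:
        names = sorted(zf.namelist())
print(names)
```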

Upload external files at runtime

To upload files from a machine other than the one running your Langflow server, the server must first be accessible over the internet. Then, authenticated users can upload files to your public Langflow server's /v2/files/ endpoint, as shown in this tutorial. For more information, see Langflow deployment overview.

Preload files outside the chat session

You can use the Read File component to load files anywhere in a flow, not just in a chat session.

In the visual editor, you can preload files to the Read File component by selecting them from your local machine or Langflow file management.

For example, you can preload an instructions file for a prompt template, or you can preload a vector store with documents that you want to query in a Retrieval Augmented Generation (RAG) flow.

For more information about the Read File component and other data loading components, see the Read File component.