IBM

Bundles contain custom components that support specific third-party integrations with Langflow.

The IBM bundle provides access to IBM watsonx.ai models for text and embedding generation. These components require an IBM watsonx.ai deployment and watsonx API credentials.

IBM watsonx.ai

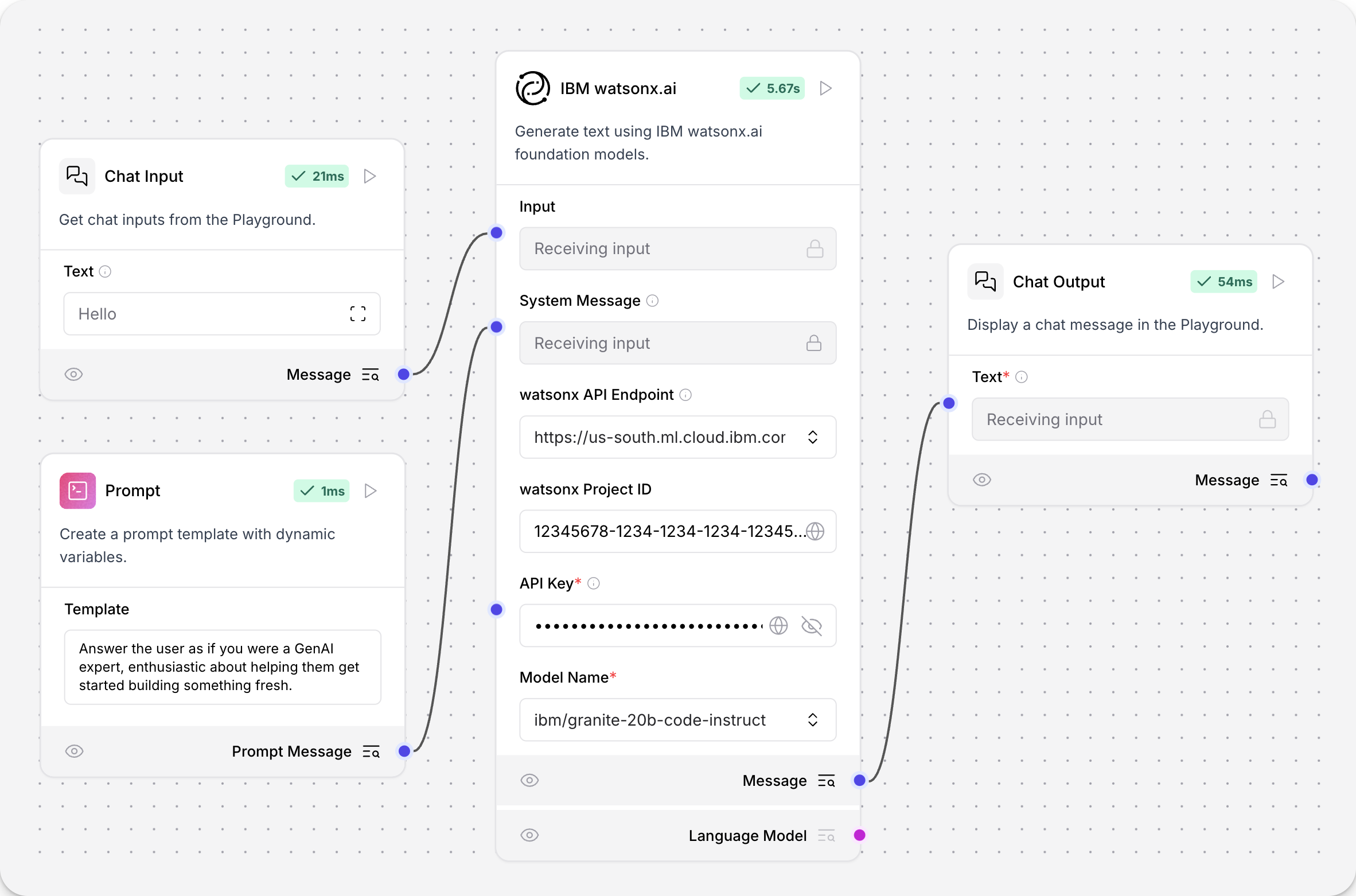

The IBM watsonx.ai component generates text using supported foundation models in IBM watsonx.ai. To use gateway models, use the OpenAI text generation component with the gateway model's OpenAI-compatible endpoint.

You can use the IBM watsonx.ai component anywhere you need a language model in a flow.

IBM watsonx.ai parameters

Some parameters are hidden by default in the visual editor. You can modify all parameters through the Controls in the component's header menu.

| Name | Type | Description |
|---|---|---|
| url | String | Input parameter. The watsonx API base URL for your deployment and region. |
| project_id | String | Input parameter. Your watsonx project ID. |
| api_key | SecretString | Input parameter. A watsonx API key that authenticates access to the specified watsonx.ai deployment and model. |
| model_name | String | Input parameter. The name of the watsonx model to use. Options are dynamically fetched from the API. |
| max_tokens | Integer | Input parameter. The maximum number of tokens to generate. Default: 1000. |
| stop_sequence | String | Input parameter. The sequence at which generation stops. |
| temperature | Float | Input parameter. Controls randomness in the output. Default: 0.1. |
| top_p | Float | Input parameter. Controls nucleus sampling, which limits sampling to the smallest set of most likely tokens whose cumulative probability reaches the top_p value. Default: 0.9. |
| frequency_penalty | Float | Input parameter. Controls frequency penalty. A positive value decreases the probability of repeating tokens, and a negative value increases it. Default: 0.5. |
| presence_penalty | Float | Input parameter. Controls presence penalty. A positive value increases the likelihood of new topics being introduced. Default: 0.3. |
| seed | Integer | Input parameter. A random seed for the model. Default: 8. |
| logprobs | Boolean | Input parameter. Whether to return log probabilities of the output tokens. Default: true. |
| top_logprobs | Integer | Input parameter. The number of most likely tokens to return at each position. Default: 3. |
| logit_bias | String | Input parameter. A JSON string of token IDs to bias or suppress. |
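To make the effect of top_p more concrete, the following toy sketch shows how nucleus sampling is commonly described: tokens are ranked by probability, and the smallest set whose cumulative probability reaches top_p is kept as the sampling pool. The function name `nucleus` and the example probabilities are made up for illustration; this is not how watsonx.ai implements sampling internally.

```python
def nucleus(probs, top_p):
    """Return the tokens kept by nucleus (top-p) filtering.

    probs: dict mapping token -> probability (illustrative values only).
    top_p: cumulative probability threshold, for example 0.9.
    """
    kept, cumulative = [], 0.0
    # Walk the tokens from most to least likely.
    for token, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept.append(token)
        cumulative += p
        # Stop once the kept tokens cover at least top_p of the probability mass.
        if cumulative >= top_p:
            break
    return kept


probs = {"cat": 0.5, "dog": 0.3, "fish": 0.15, "emu": 0.05}
print(nucleus(probs, 0.9))  # ['cat', 'dog', 'fish']
print(nucleus(probs, 0.5))  # ['cat']
```

A lower top_p shrinks the pool of candidate tokens, which tends to make output more predictable; a value close to 1 leaves almost every token eligible.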

IBM watsonx.ai output

The IBM watsonx.ai component can output either a Model Response (Message) or a Language Model (LanguageModel).

Use the Language Model output when you want to use an IBM watsonx.ai model as the LLM for another LLM-driven component, such as an Agent or Smart Transform component. For more information, see Language model components.

The LanguageModel output from the IBM watsonx.ai component is an instance of [ChatWatsonx](https://docs.langchain.com/oss/python/integrations/chat/ibm_watsonx) configured according to the component's parameters.
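As a rough illustration of what "configured according to the component's parameters" can mean, the sketch below gathers the documented defaults into one dictionary, applies overrides, and parses a logit_bias JSON string. The function name `build_generation_params`, the `stop` key, and the assumed JSON shape (an object mapping token IDs to numeric bias values) are hypothetical and chosen for illustration; they are not taken from Langflow's or ChatWatsonx's source.

```python
import json

# Defaults copied from the parameter table on this page.
DEFAULTS = {
    "max_tokens": 1000,
    "temperature": 0.1,
    "top_p": 0.9,
    "frequency_penalty": 0.5,
    "presence_penalty": 0.3,
    "seed": 8,
    "logprobs": True,
    "top_logprobs": 3,
}


def build_generation_params(overrides=None, logit_bias=None, stop_sequence=None):
    """Merge defaults with overrides and parse optional string inputs.

    Hypothetical helper: the output keys are assumptions, not a real API contract.
    """
    params = {**DEFAULTS, **(overrides or {})}
    if stop_sequence:
        # Assumed key name; many chat APIs accept a list of stop strings.
        params["stop"] = [stop_sequence]
    if logit_bias:
        parsed = json.loads(logit_bias)
        if not isinstance(parsed, dict):
            raise ValueError("logit_bias must be a JSON object")
        # Normalize token IDs to strings and bias values to floats.
        params["logit_bias"] = {str(k): float(v) for k, v in parsed.items()}
    return params


params = build_generation_params(
    overrides={"temperature": 0.7},
    logit_bias='{"1003": -100, "42": 5}',
    stop_sequence="END",
)
print(params["temperature"], params["logit_bias"], params["stop"])
```

Validating the JSON string before it reaches the model keeps a malformed logit_bias value from failing later in the flow, where the error is harder to trace.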

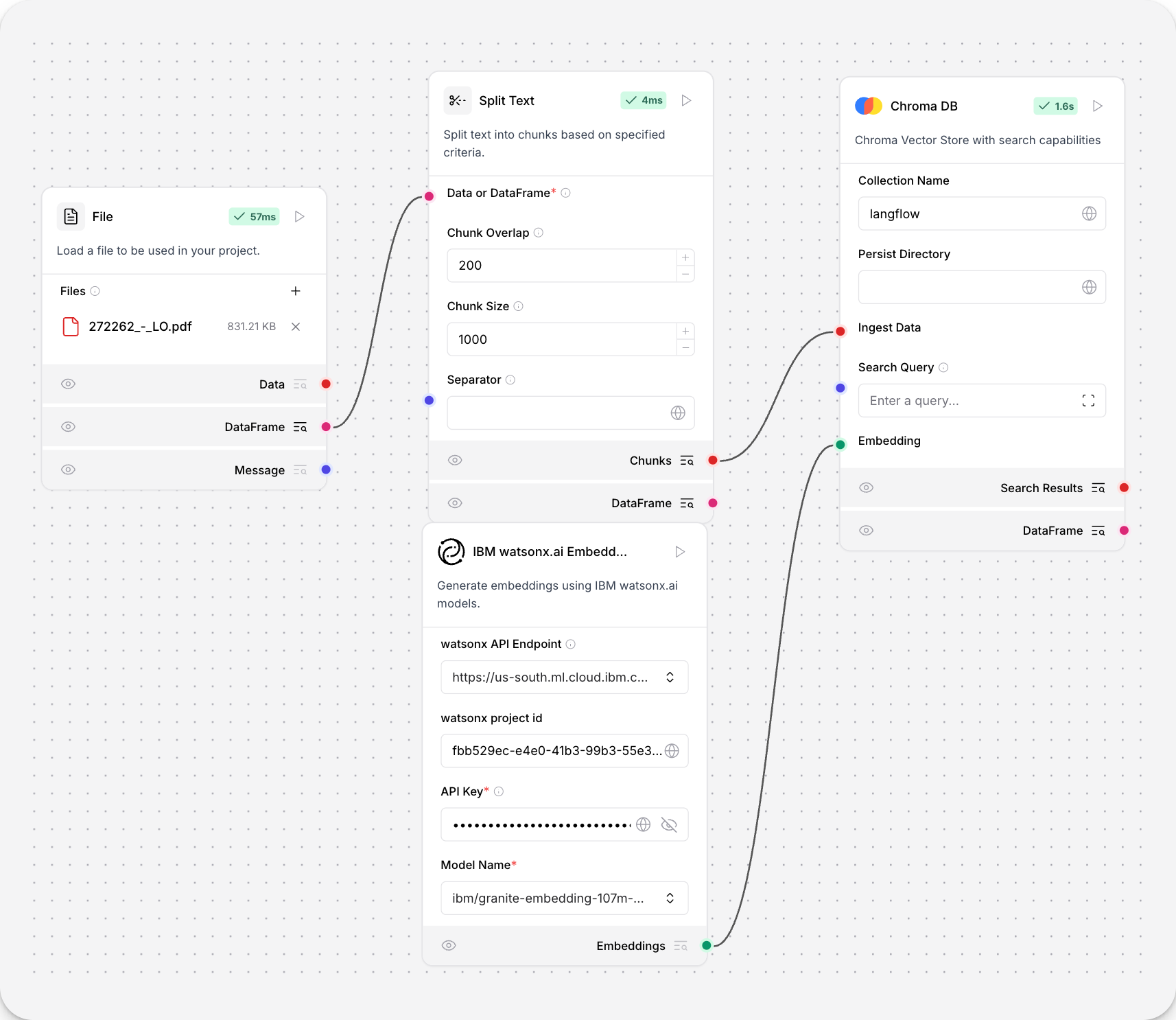

IBM watsonx.ai Embeddings

The IBM watsonx.ai Embeddings component uses the supported foundation models in IBM watsonx.ai for embedding generation.

The output is Embeddings generated with WatsonxEmbeddings.

For more information about using embedding model components in flows, see Embedding model components.

IBM watsonx.ai Embeddings parameters

Some parameters are hidden by default in the visual editor. You can modify all parameters through the Controls in the component's header menu.

| Name | Display Name | Info |
|---|---|---|
| url | watsonx API Endpoint | Input parameter. The watsonx API base URL for your deployment and region. |
| project_id | watsonx project id | Input parameter. Your watsonx Project ID. |
| api_key | API Key | Input parameter. A watsonx API key to authenticate watsonx API access to the specified watsonx.ai deployment and model. |
| model_name | Model Name | Input parameter. The name of the embedding model to use. Supports default embedding models and automatically updates after connecting to your watsonx.ai deployment. |
| truncate_input_tokens | Truncate Input Tokens | Input parameter. The maximum number of tokens to process. Default: 200. |
| input_text | Include the original text in the output | Input parameter. Determines if the original text is included in the output. Default: true. |

Default embedding models

By default, the IBM watsonx.ai Embeddings component supports the following models:

- sentence-transformers/all-minilm-l12-v2: 384-dimensional embeddings
- ibm/slate-125m-english-rtrvr-v2: 768-dimensional embeddings
- ibm/slate-30m-english-rtrvr-v2: 768-dimensional embeddings
- intfloat/multilingual-e5-large: 1024-dimensional embeddings

After entering your API endpoint and credentials, the component automatically fetches the list of available models from your watsonx.ai deployment.
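The embedding dimension matters when the vectors are stored downstream, because a vector store index is typically created for one fixed dimension. The following minimal sketch checks a vector's length against the dimensions listed for the default models on this page. The helper name `check_dimension` is hypothetical, and models fetched from your deployment that are not in the default list are simply reported as unknown.

```python
# Dimensions copied from the list of default models on this page.
DEFAULT_MODEL_DIMENSIONS = {
    "sentence-transformers/all-minilm-l12-v2": 384,
    "ibm/slate-125m-english-rtrvr-v2": 768,
    "ibm/slate-30m-english-rtrvr-v2": 768,
    "intfloat/multilingual-e5-large": 1024,
}


def check_dimension(model_name, vector):
    """Return True/False if the model is a known default, or None if unknown."""
    expected = DEFAULT_MODEL_DIMENSIONS.get(model_name)
    if expected is None:
        # Model is not in the default list, so there is nothing to compare against.
        return None
    return len(vector) == expected


# Stand-in vector of zeros; a real vector would come from the embeddings component.
vector = [0.0] * 384
print(check_dimension("sentence-transformers/all-minilm-l12-v2", vector))  # True
print(check_dimension("intfloat/multilingual-e5-large", vector))  # False
```

Running a check like this before writing to a vector store can catch a mismatch early, for example after switching model_name without recreating the index.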