Ollama

Bundles contain custom components that support specific third-party integrations with Langflow.

This page describes the components that are available in the Ollama bundle.

For more information about Ollama features and functionality used by Ollama components, see the Ollama documentation.

Ollama text generation

This component generates text using Ollama's language models.

To use the Ollama component in a flow, connect Langflow to your locally running Ollama server and select a model:

- Add the Ollama component to your flow.

- In the Base URL field, enter the address for your locally running Ollama server.

  This value is set as the OLLAMA_HOST environment variable in Ollama. The default base URL is http://127.0.0.1:11434.

- Once the connection is established, select a model in the Model Name field, such as llama3.2:latest.

  To refresh the server's list of models, click Refresh.

- Optional: To configure additional parameters, such as temperature or max tokens, click Controls in the component's header menu.

- Connect the Ollama component to other components in the flow, depending on how you want to use the model.
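If the Model Name list stays empty, it can help to confirm outside Langflow that the Base URL actually reaches an Ollama server. The following Python sketch uses only the standard library and assumes Ollama's REST endpoint GET /api/tags, which returns the models installed on the server. The helper names resolve_base_url, model_names, and list_models are hypothetical, and treating a bare host:port value of OLLAMA_HOST as an http:// address is an assumption made for illustration.

```python
import json
import os
import urllib.request
from typing import List, Optional

# Default Ollama address, as given in the steps above.
DEFAULT_BASE_URL = "http://127.0.0.1:11434"


def resolve_base_url(value: Optional[str] = None) -> str:
    """Pick a base URL: explicit value, then OLLAMA_HOST, then the default."""
    url = value or os.environ.get("OLLAMA_HOST") or DEFAULT_BASE_URL
    # Assumption: a bare "host:port" value should be reached over plain HTTP.
    if "://" not in url:
        url = "http://" + url
    return url.rstrip("/")


def model_names(tags_payload: dict) -> List[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [model["name"] for model in tags_payload.get("models", [])]


def list_models(base_url: str, timeout: float = 5.0) -> List[str]:
    """Query the server for its local models (assumed to match what Refresh lists)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))
```

For example, list_models(resolve_base_url()) returns names such as llama3.2:latest if that model has been pulled, or raises urllib.error.URLError if nothing is listening at the address.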

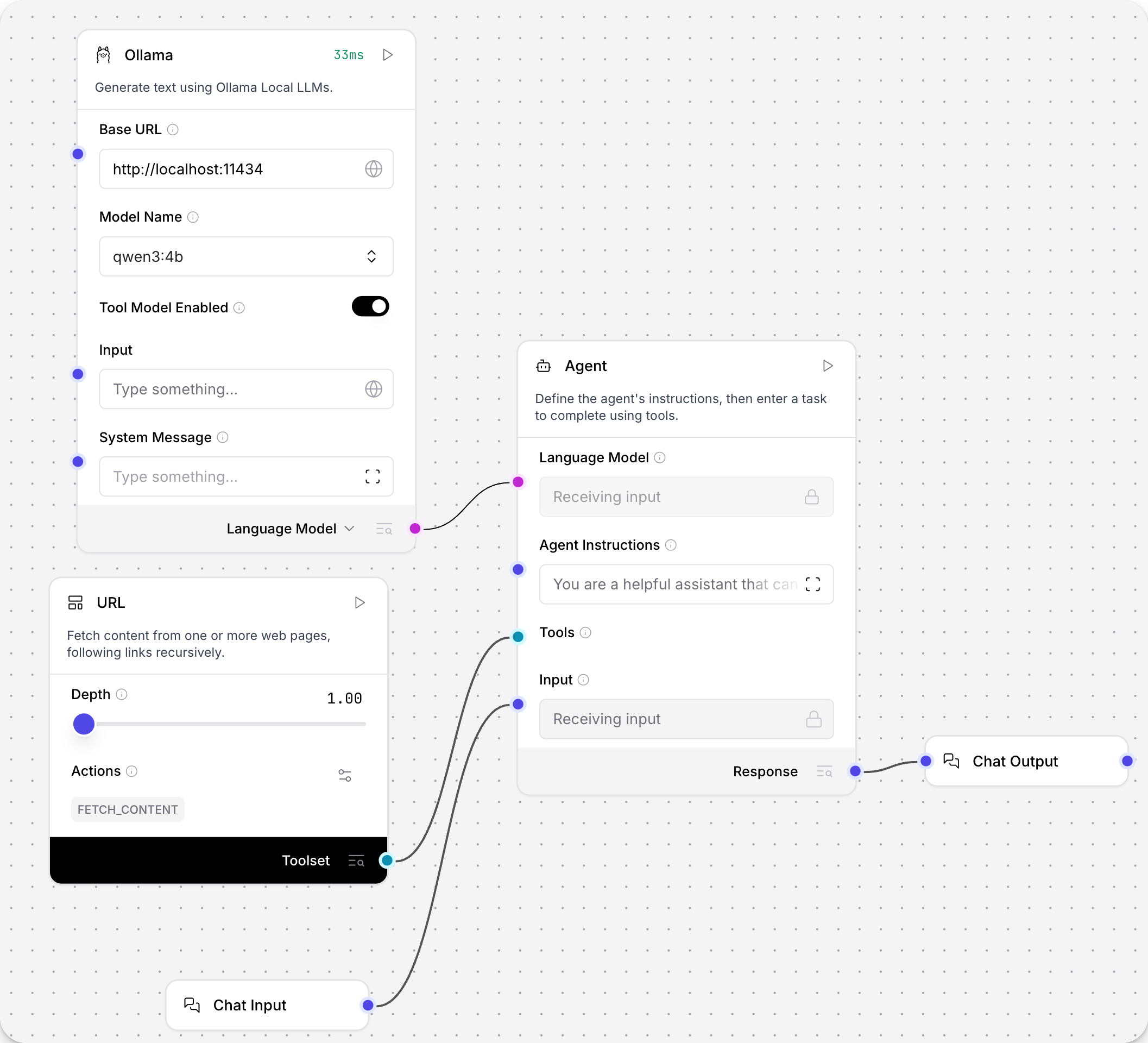

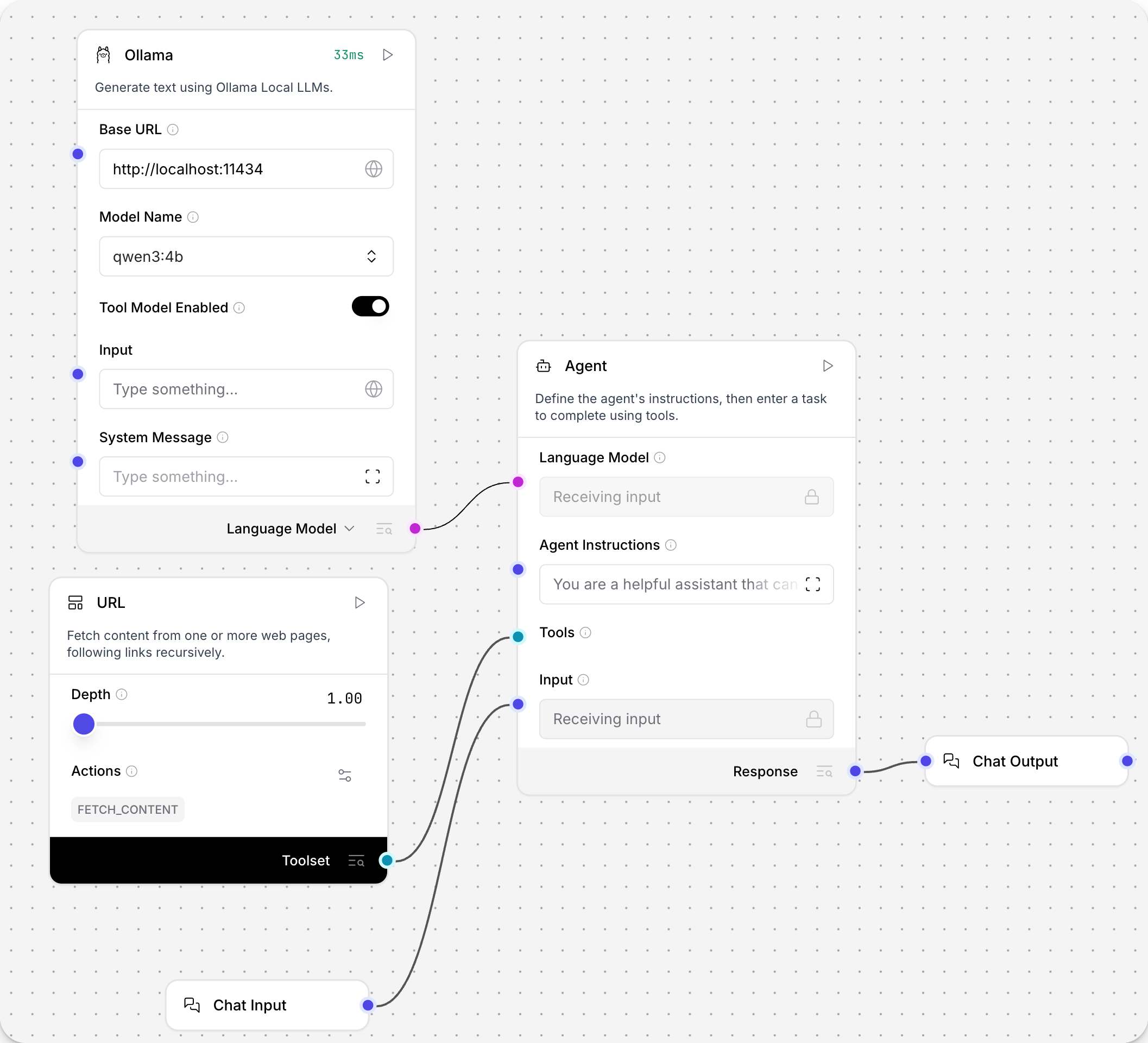

Language model components can output either a Model Response (Message) or a Language Model (LanguageModel). Use the Language Model output when you want to use an Ollama model as the LLM for another LLM-driven component, such as an Agent or Smart Transform component. For more information, see Language model components.

In the following example, the flow uses the Language Model output to provide an Ollama model as the LLM for an Agent component.
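The Model Response output carries the model's reply as a message. As a rough illustration of the kind of request behind such a reply, the following Python sketch calls Ollama's POST /api/chat endpoint directly, outside Langflow. It is not presented as the component's implementation: the helper names are hypothetical, and mapping the temperature and max tokens controls onto Ollama's temperature and num_predict options is an assumption.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(model: str, prompt: str,
                       temperature: Optional[float] = None,
                       max_tokens: Optional[int] = None) -> dict:
    """Build a non-streaming request body for Ollama's POST /api/chat."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # return one JSON object instead of a stream of chunks
    }
    options = {}
    if temperature is not None:
        options["temperature"] = temperature
    if max_tokens is not None:
        options["num_predict"] = max_tokens  # Ollama's name for a max-token limit
    if options:
        body["options"] = options
    return body


def reply_text(response_body: dict) -> str:
    """Return the assistant message text from a non-streaming /api/chat reply."""
    return response_body["message"]["content"]


def chat(base_url: str, body: dict, timeout: float = 120.0) -> str:
    """POST the body to the Ollama server and return the reply text."""
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return reply_text(json.load(resp))
```

For example, chat("http://127.0.0.1:11434", build_chat_request("llama3.2:latest", "Say hello", temperature=0.2, max_tokens=64)) returns the reply as a plain string, assuming the server is running and the model has been pulled.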

Ollama Embeddings

The Ollama Embeddings component generates embeddings using Ollama embedding models.

To use this component in a flow, connect Langflow to your locally running Ollama server and select an embeddings model:

- Add the Ollama Embeddings component to your flow.

- In the Ollama Base URL field, enter the address for your locally running Ollama server.

  This value is set as the OLLAMA_HOST environment variable in Ollama. The default base URL is http://127.0.0.1:11434.

- Once the connection is established, select a model in the Ollama Model field, such as all-minilm:latest.

  To refresh the server's list of models, click Refresh.

- Optional: To configure additional parameters, such as temperature or max tokens, click Controls in the component's header menu. Available parameters depend on the selected model.

- Connect the Ollama Embeddings component to other components in the flow. For more information about using embedding model components in flows, see Embedding model components.
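An embedding model turns each input string into a vector of floats. The following Python sketch requests embeddings directly from Ollama, assuming the POST /api/embed endpoint of recent Ollama releases, which accepts a list of strings under input and returns one vector per string under embeddings. The helper names are hypothetical, and this is not presented as the component's implementation.

```python
import json
import urllib.request
from typing import List


def build_embed_request(model: str, texts: List[str]) -> dict:
    """Request body for Ollama's POST /api/embed: one vector per input string."""
    return {"model": model, "input": texts}


def parse_embeddings(response_body: dict, expected: int) -> List[List[float]]:
    """Return the vectors and check that every input got exactly one."""
    vectors = response_body["embeddings"]
    if len(vectors) != expected:
        raise ValueError(f"expected {expected} embeddings, got {len(vectors)}")
    return vectors


def embed(base_url: str, model: str, texts: List[str],
          timeout: float = 60.0) -> List[List[float]]:
    """Embed a batch of texts with the given Ollama embedding model."""
    request = urllib.request.Request(
        f"{base_url}/api/embed",
        data=json.dumps(build_embed_request(model, texts)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return parse_embeddings(json.load(resp), expected=len(texts))
```

Batching several texts into one request keeps the number of round trips to the server low; the length check guards against silently pairing a chunk with the wrong vector.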

This example connects the Ollama Embeddings component to generate embeddings for text chunks extracted from a PDF file, and then stores the embeddings and chunks in a Chroma DB vector store.
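The example flow described above splits the PDF text into chunks, embeds each chunk, and stores each chunk together with its vector so that later queries can be matched by similarity. The following Python sketch mimics that pipeline with a toy in-memory store in place of Chroma DB. The fixed-size character splitter, the cosine-similarity search, and all names (split_text, cosine, InMemoryVectorStore) are illustrative assumptions, not the behavior of Langflow's components or of Chroma DB.

```python
import math
from typing import Callable, List, Sequence, Tuple

# An embedding function maps a batch of texts to one vector per text.
EmbedFn = Callable[[List[str]], List[List[float]]]


def split_text(text: str, chunk_size: int = 500, overlap: int = 50) -> List[str]:
    """Split text into fixed-size character chunks that overlap by `overlap`."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    chunks: List[str] = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
        start += chunk_size - overlap
    return chunks


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Toy stand-in for a vector store such as Chroma DB: keeps (chunk, vector) pairs."""

    def __init__(self, embed: EmbedFn) -> None:
        self._embed = embed
        self._rows: List[Tuple[str, List[float]]] = []

    def add_texts(self, texts: List[str]) -> None:
        """Embed the chunks and store each one next to its vector."""
        self._rows.extend(zip(texts, self._embed(texts)))

    def search(self, query: str, k: int = 3) -> List[str]:
        """Return the k stored chunks most similar to the query."""
        query_vector = self._embed([query])[0]
        ranked = sorted(self._rows, key=lambda row: cosine(query_vector, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

In practice, the embed argument would be a function that sends the chunks to the Ollama server and returns their vectors; for an offline check, any function that returns one vector per text works.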