Basic Prompting

warning

This page may contain outdated information. It will be updated as soon as possible.

Prompts serve as the inputs to a large language model (LLM), acting as the interface between human instructions and computational tasks.

By submitting natural language requests in a prompt to an LLM, you can obtain answers, generate text, and solve problems.

This article demonstrates how to use Langflow's prompt tools to issue basic prompts to an LLM, and how various prompting strategies can affect your outcomes.

Prerequisites

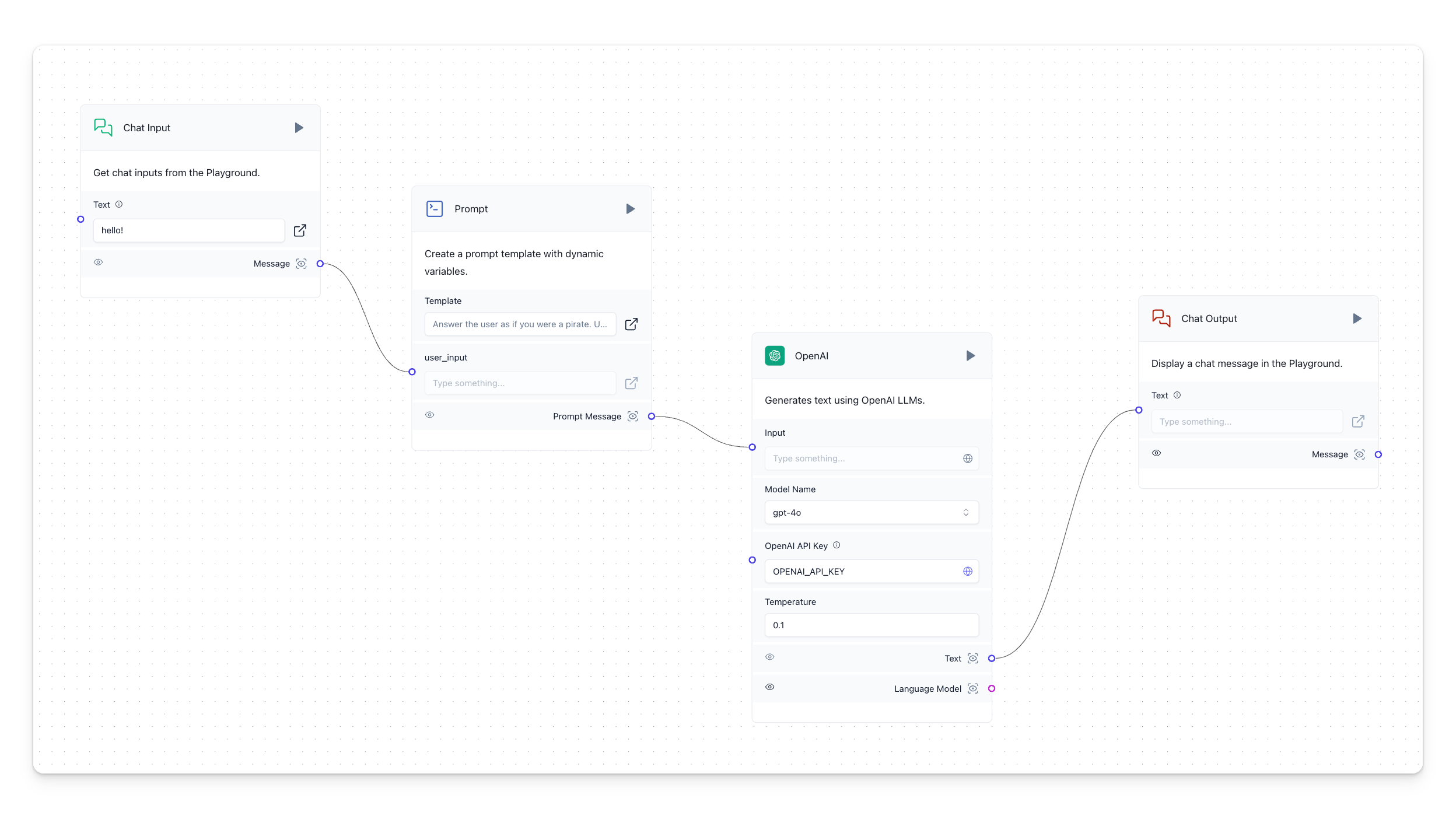

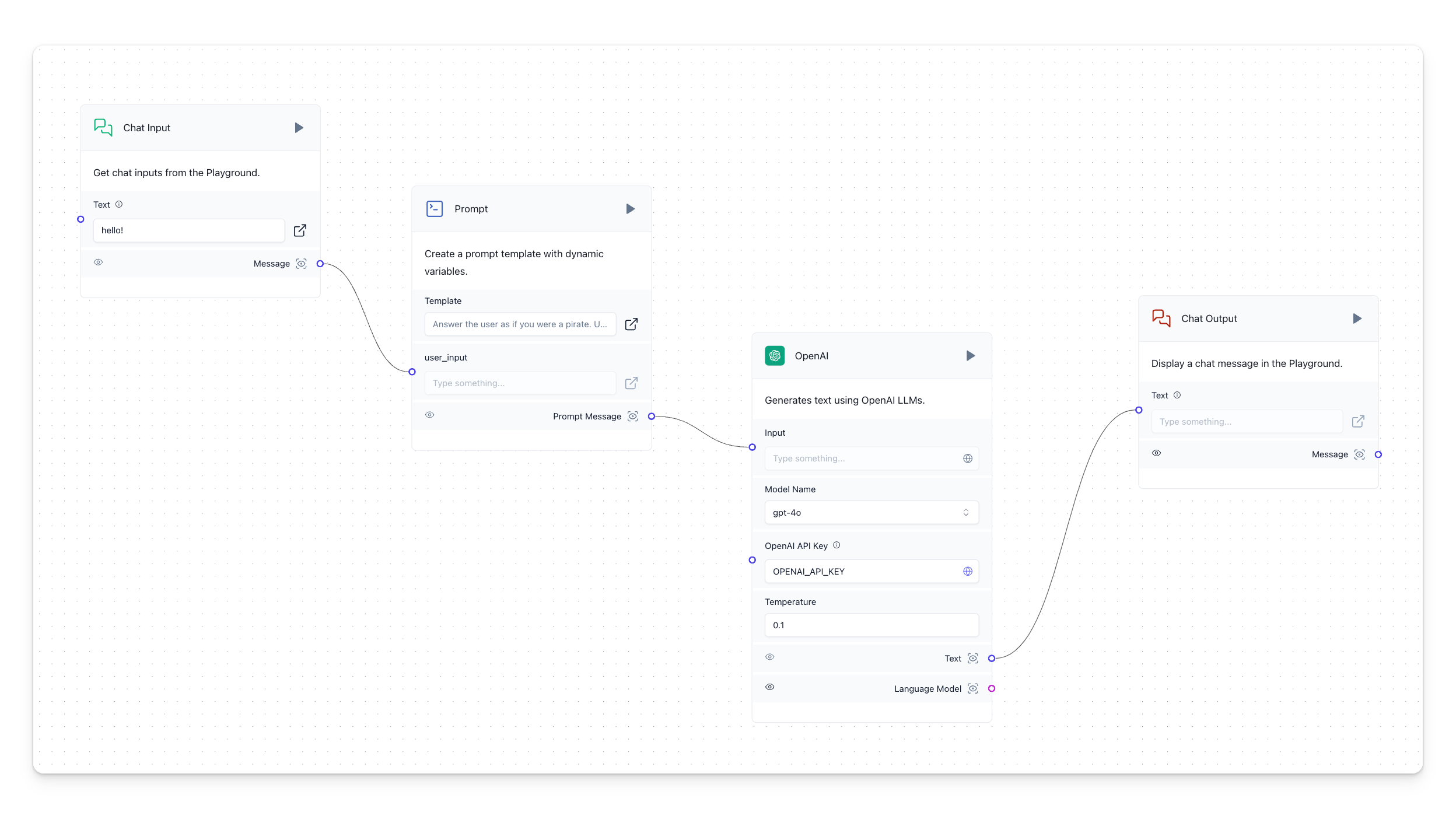

Create the basic prompting project

- From the Langflow dashboard, click New Project.

- Select Basic Prompting.

- The Basic Prompting flow is created.

This flow allows you to chat with the OpenAI component via a Prompt component.

Examine the Prompt component. The Template field instructs the LLM to `Answer the user as if you were a pirate.`

This should be interesting...
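To make the role of the Template field concrete, here is a minimal Python sketch of how a prompt template might be combined with a user's message before the result is sent to an LLM. It is not Langflow's implementation. The `{user_input}` placeholder name, the `TEMPLATE` constant, and the `render_prompt` helper are assumptions made for illustration.

```python
# Hypothetical template text, modeled on the Template field described above.
# The {user_input} placeholder name is an assumption, not taken from Langflow.
TEMPLATE = (
    "Answer the user as if you were a pirate.\n\n"
    "User: {user_input}\n"
    "Answer:"
)


def render_prompt(template: str, user_input: str) -> str:
    """Fill the template's placeholder with the user's message."""
    return template.format(user_input=user_input)


if __name__ == "__main__":
    # The rendered string is what the LLM would receive as its input.
    print(render_prompt(TEMPLATE, "How do I install Python?"))
```

The instruction and the user's message travel together in a single prompt, which is why editing the template changes every response the model gives.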

- To create an environment variable for the OpenAI component, in the OpenAI API Key field, click the Globe button, and then click Add New Variable.

  - In the Variable Name field, enter `openai_api_key`.

  - In the Value field, paste your OpenAI API Key (`sk-...`).

  - Click Save Variable.
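Storing the key as a variable keeps it out of the flow itself. The same idea can be sketched in plain Python, assuming the key has been exported as an operating-system environment variable. The `OPENAI_API_KEY` name and the `load_api_key` and `mask_key` helpers are assumptions for illustration and are separate from the Langflow variable created above.

```python
import os


def load_api_key(env_name: str = "OPENAI_API_KEY") -> str:
    """Return the key stored in the environment, or raise a clear error if unset."""
    key = os.environ.get(env_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_name} environment variable first.")
    return key


def mask_key(key: str) -> str:
    """Keep only the first three characters so the key is safe to print."""
    return key[:3] + "..."


if __name__ == "__main__":
    try:
        print("Loaded key:", mask_key(load_api_key()))
    except RuntimeError as err:
        print(err)
```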

Run the basic prompting flow

- Click the Run button. The Interaction Panel opens, where you can converse with your bot.

- Type a message and press Enter. The bot responds in a markedly piratical manner!

Modify the prompt for a different result

- To change the prompt, click the Template field in the Prompt component. The Edit Prompt window opens.

- Change `Answer the user as if you were a pirate` to a different character, perhaps `Answer the user as if you were Harold Abelson`.

- Run the basic prompting flow again. The response will be markedly different.