Vector Store RAG

This page may contain outdated information. It will be updated as soon as possible.

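Retrieval Augmented Generation, or RAG, is a pattern for querying your own data with an LLM: at query time, the most relevant pieces of your data are retrieved and added to the model's prompt, so the model does not need to be trained on that data.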

RAG is backed by a vector store, a vector database that stores embeddings of the ingested data.

This enables vector search, which finds content by the semantic similarity of embeddings rather than by exact keyword matches.
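To make vector search concrete, here is a minimal sketch of the core idea, assuming cosine similarity as the similarity measure and a plain Python list in place of a vector database. The three-dimensional vectors are made-up toy values standing in for real embeddings, and `cosine_similarity` and `vector_search` are hypothetical helper names, not Astra DB or Langflow APIs.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def vector_search(query_vec: list[float], store, k: int = 2) -> list[str]:
    """Return the k stored texts whose embeddings are most similar to query_vec."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]

# Toy 3-dimensional "embeddings" (hypothetical values; real embedding models
# produce vectors with hundreds or thousands of dimensions).
store = [
    ("Drain the old oil into a pan.", [0.9, 0.1, 0.0]),
    ("Check the tire pressure monthly.", [0.0, 0.2, 0.9]),
    ("Replace the oil filter when changing oil.", [0.7, 0.4, 0.1]),
]

query = [0.85, 0.2, 0.05]  # hypothetical embedding of "How do I change the oil?"
print(vector_search(query, store, k=2))
# → ['Drain the old oil into a pan.', 'Replace the oil filter when changing oil.']
```

A real vector database performs this ranking server-side and typically uses an index so it does not have to compare the query against every stored vector.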

We've chosen Astra DB as the vector database for this starter project, but you can follow along with any of Langflow's vector database options.

Prerequisites

- An Astra DB vector database created with:
  - Application token (AstraCS:WSnyFUhRxsrg…)
  - API endpoint (https://ASTRA_DB_ID-ASTRA_DB_REGION.apps.astra.datastax.com)

Create the vector store RAG project

- From the Langflow dashboard, click New Project.

- Select Vector Store RAG.

- The Vector Store RAG flow is created.

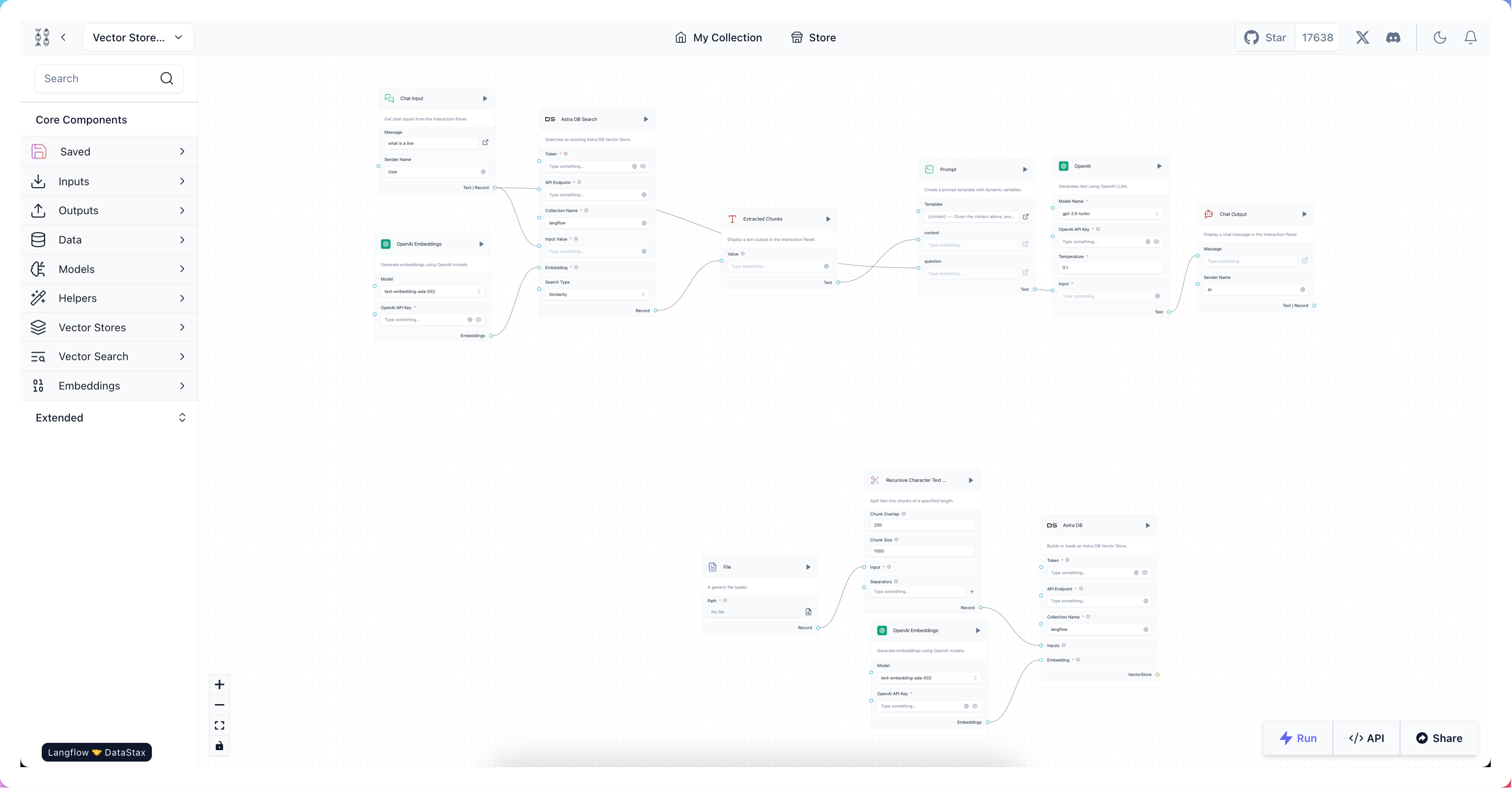

The vector store RAG flow consists of two separate flows.

The ingestion flow (bottom of the screen) populates the vector store with data from a local file. It loads the file (File), splits its contents into chunks (Recursive Character Text Splitter), computes an embedding for each chunk (OpenAI Embeddings), and stores the chunks and their embeddings in Astra DB (Astra DB). This forms a "brain" for the query flow.
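The ingestion steps can be sketched in plain Python as follows. This is a simplified recursive splitter written for illustration, not the Recursive Character Text Splitter component's actual implementation, and `fake_embed` is a hypothetical stand-in for OpenAI Embeddings that just counts letters so the example runs offline. The returned list plays the role of Astra DB.

```python
def split_text(text: str, chunk_size: int,
               separators: tuple[str, ...] = ("\n\n", "\n", " ", "")) -> list[str]:
    """Simplified recursive character splitter (illustrative; no chunk overlap)."""
    if len(text) <= chunk_size:
        return [text] if text else []
    sep, *rest = separators
    if sep == "":
        # Last resort: hard-cut the text into fixed-size pieces.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    if sep not in text:
        # This separator doesn't occur; try the next, finer-grained one.
        return split_text(text, chunk_size, tuple(rest))
    chunks: list[str] = []
    current = ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep packing pieces into the current chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) > chunk_size:
            # The piece alone is too long: split it with the finer separators.
            chunks.extend(split_text(piece, chunk_size, tuple(rest)))
            current = ""
        else:
            current = piece
    if current:
        chunks.append(current)
    return chunks

def fake_embed(text: str) -> list[float]:
    """Hypothetical stand-in for OpenAI Embeddings: counts of the letters a-z."""
    lowered = text.lower()
    return [float(lowered.count(ch)) for ch in "abcdefghijklmnopqrstuvwxyz"]

def ingest(document: str, chunk_size: int) -> list[tuple[str, list[float]]]:
    """Split, then embed each chunk; the returned list stands in for Astra DB."""
    return [(chunk, fake_embed(chunk)) for chunk in split_text(document, chunk_size)]

manual = ("Turn the engine off.\n\nLet it cool.\nPlace a drain pan under the plug."
          "\n\nRemove the plug with a wrench.")
store = ingest(manual, chunk_size=40)
for chunk, vector in store:
    print(repr(chunk), len(vector))
```

The splitter prefers paragraph breaks, then line breaks, then spaces, and hard-cuts only when a piece is still too long. A production splitter typically also supports chunk overlap, so neighboring chunks share some text; this sketch omits that.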

The query flow (top of the screen) allows users to chat with the embedded vector store data. It's a little more complex:

- Chat Input component receives the user input from the Playground.

- OpenAI Embeddings component generates embeddings from the user input.

- Astra DB Search component retrieves the most relevant Data from the Astra DB database.

- Text Output component converts the retrieved Data into Text by concatenating the records, and displays the result in the Playground.

- Prompt component takes in the user input and the retrieved Data as text and builds a prompt for the OpenAI model.

- OpenAI component generates a response to the prompt.

- Chat Output component displays the response in the Playground.
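The query flow's data path can be sketched as a chain of plain functions, one per component. Everything here is hypothetical: `embed` counts letters in place of OpenAI Embeddings, `search` ranks an in-memory list in place of Astra DB Search, `PROMPT_TEMPLATE` is an illustrative template rather than the starter project's actual prompt, and `llm` is any callable you pass in for the OpenAI model.

```python
import math

def embed(text: str) -> list[float]:
    """Hypothetical stand-in for OpenAI Embeddings: counts of the letters a-z."""
    lowered = text.lower()
    return [float(lowered.count(ch)) for ch in "abcdefghijklmnopqrstuvwxyz"]

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0

def search(query_vec: list[float], store, k: int) -> list[str]:
    """Astra DB Search stand-in: the k stored chunks most similar to the query."""
    ranked = sorted(store, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

def to_text(records: list[str]) -> str:
    """Text Output stand-in: concatenate the retrieved records into one string."""
    return "\n".join(records)

# Hypothetical template; the starter project's Prompt component uses its own wording.
PROMPT_TEMPLATE = (
    "Answer the question using only this context:\n{context}\n\nQuestion: {question}"
)

def answer(question: str, store, llm, k: int = 2) -> str:
    query_vec = embed(question)                     # OpenAI Embeddings
    context = to_text(search(query_vec, store, k))  # Astra DB Search + Text Output
    prompt = PROMPT_TEMPLATE.format(context=context, question=question)  # Prompt
    return llm(prompt)                              # OpenAI model, then Chat Output

# Usage: chunks embedded the same way as at ingestion time, and a fake "LLM"
# that returns the prompt it receives, so you can inspect what the model would see.
chunks = ["Turn the engine off.", "Place a drain pan under the plug.", "Check tire pressure."]
store = [(chunk, embed(chunk)) for chunk in chunks]
print(answer("How do I change the oil?", store, llm=lambda prompt: prompt))
```

Note that the question is embedded with the same function used for the stored chunks; query and document vectors are only comparable when they come from the same embedding model.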

- To create an environment variable for the OpenAI component, in the OpenAI API Key field, click the Globe button, and then click Add New Variable.
  - In the Variable Name field, enter openai_api_key.
  - In the Value field, paste your OpenAI API Key (sk-...).
  - Click Save Variable.

- To create environment variables for the Astra DB and Astra DB Search components:
  - In the Token field, click the Globe button, and then click Add New Variable.
  - In the Variable Name field, enter astra_token.
  - In the Value field, paste your Astra application token (AstraCS:WSnyFUhRxsrg…).
  - Click Save Variable.
  - Repeat the above steps for the API Endpoint field, pasting your Astra API endpoint as the value instead (https://ASTRA_DB_ID-ASTRA_DB_REGION.apps.astra.datastax.com).
  - Add the global variables to both the Astra DB and Astra DB Search components.
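Before saving the Astra variables, you may want to sanity-check the values. This minimal sketch assumes only the two formats shown in the prerequisites: a token beginning with AstraCS: and an endpoint of the form https://ASTRA_DB_ID-ASTRA_DB_REGION.apps.astra.datastax.com. The helper names and the sample ID and region are hypothetical placeholders, and passing these checks does not guarantee that Astra DB will accept the credentials.

```python
import re

# Endpoint format taken from the prerequisites above.
ASTRA_ENDPOINT_TEMPLATE = "https://{db_id}-{region}.apps.astra.datastax.com"

def astra_endpoint(db_id: str, region: str) -> str:
    """Build an API endpoint string from a database ID and region."""
    return ASTRA_ENDPOINT_TEMPLATE.format(db_id=db_id, region=region)

def check_astra_values(token: str, endpoint: str) -> list[str]:
    """Return the problems found; an empty list means both values look plausible."""
    problems = []
    if not token.startswith("AstraCS:"):
        problems.append("token should start with 'AstraCS:'")
    if not re.fullmatch(r"https://[^/\s]+\.apps\.astra\.datastax\.com/?", endpoint):
        problems.append("endpoint should look like https://<id>-<region>.apps.astra.datastax.com")
    return problems

# Hypothetical placeholder ID and region, for illustration only.
endpoint = astra_endpoint("0123abcd", "us-east1")
print(endpoint)
print(check_astra_values("AstraCS:example", endpoint))
print(check_astra_values("sk-example", "http://localhost"))
```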

Run the vector store RAG flow

- Click the Playground button. The Playground opens, where you can chat with your data.

- Type a message and press Enter. (Try something like "What topics do you know about?")

- The bot responds with a summary of the data you've embedded.

For example, we embedded a PDF of an engine maintenance manual and asked, "How do I change the oil?" The bot responds:

    To change the oil in the engine, follow these steps:

    Make sure the engine is turned off and cool before starting.

    Locate the oil drain plug on the bottom of the engine.

    Place a drain pan underneath the oil drain plug to catch the old oil...

We can also get more specific:

    User
    What size wrench should I use to remove the oil drain cap?

    AI
    You should use a 3/8 inch wrench to remove the oil drain cap.

This matches the size listed in the engine manual, which confirms the flow works: the answer draws on the specific knowledge we embedded in the Astra DB vector store.